High Throughput Plant Phenotyping with Direct Georeferencing of Altum Imagery

The Project: increasing the value of data through Direct Georeferencing

In 2019, the University of Guelph’s programs in precision agriculture and soybean breeding collected small-plot data at the Elora Research Station, which is owned by the Agricultural Research Institute of Ontario and managed by the University of Guelph through the Ontario Agri-Food Innovation Alliance.

The Solution

Trimble APX-15 UAV Direct Georeferencing from Applanix

The highly advanced Differential GNSS-inertial solution with POSPac UAV post-processing software, used alongside the MicaSense Altum multispectral imaging system, provides high-accuracy georeferenced imagery.

Overview

Plant breeding programs collect and manage large volumes of data that have rigorous data quality requirements. Aerial imagery provides high spatial resolution, but its georeferencing accuracy is typically low (horizontal errors of ~5 m), which limits its throughput and usefulness. High-accuracy georeferencing of geospatial data layers, however, enables rapid integration of data collected on the ground with imagery collected from low altitude, and can automate some processes that are otherwise conducted manually or with complex algorithms. This greatly reduces the workload needed to analyse the phenotypic traits used to select the best seed genetics.

Direct Georeferencing (DG) uses differential GNSS and inertial sensors to measure the position and orientation of the imaging sensor at each exposure, so geospatial products can be created efficiently without Ground Control Points (GCPs) or traditional Aerial Triangulation. High spatial resolution and georeferencing accuracy make information extraction and advanced analytics possible in both the spatial and temporal domains, at much greater efficiency than collecting the same information on the ground.
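The core Direct Georeferencing calculation can be sketched in a few lines: a pixel's viewing ray is rotated by the measured attitude and projected from the measured camera position onto the ground. This is a simplified illustration under loudly stated assumptions: flat ground at a known height, an ideal pinhole camera with no lens distortion, no boresight misalignment, and an East-North-Up frame with a Z-Y-X roll/pitch/yaw convention chosen for convenience. It is not how POSPac UAV is implemented, and the names `rotation_enu` and `georeference_pixel` are hypothetical.

```python
import numpy as np


def rotation_enu(roll, pitch, yaw):
    """Body-to-ENU rotation matrix from roll, pitch, yaw in radians.

    Assumed convention (illustrative only): R = Rz(yaw) @ Ry(pitch) @ Rx(roll),
    with yaw measured counterclockwise about the Up axis.
    """
    cr, sr = np.cos(roll), np.sin(roll)
    cp, sp = np.cos(pitch), np.sin(pitch)
    cy, sy = np.cos(yaw), np.sin(yaw)
    rz = np.array([[cy, -sy, 0.0], [sy, cy, 0.0], [0.0, 0.0, 1.0]])
    ry = np.array([[cp, 0.0, sp], [0.0, 1.0, 0.0], [-sp, 0.0, cp]])
    rx = np.array([[1.0, 0.0, 0.0], [0.0, cr, -sr], [0.0, sr, cr]])
    return rz @ ry @ rx


def georeference_pixel(u, v, ins_pos, roll, pitch, yaw, f_px, cx, cy,
                       lever_arm=(0.0, 0.0, 0.0), ground_z=0.0):
    """Project pixel (u, v) onto the flat ground plane z = ground_z.

    ins_pos      -- GNSS-inertial position (E, N, U) in metres at the exposure time
    lever_arm    -- camera offset from the INS reference point, body frame, metres
    f_px, cx, cy -- pinhole focal length and principal point, in pixels
    """
    R = rotation_enu(roll, pitch, yaw)
    # Camera position = INS position + lever arm rotated into the ENU frame.
    cam_pos = np.asarray(ins_pos, dtype=float) + R @ np.asarray(lever_arm, dtype=float)
    # Viewing ray in the body frame: at zero attitude the camera looks straight
    # down, image columns increase toward East and image rows toward South.
    ray = R @ np.array([(u - cx) / f_px, -(v - cy) / f_px, -1.0])
    if ray[2] >= 0:
        raise ValueError("viewing ray does not point toward the ground")
    t = (ground_z - cam_pos[2]) / ray[2]  # scale factor along the ray
    return cam_pos + t * ray


# Nadir image at 100 m: a pixel 500 columns right of centre lands 50 m East.
print(georeference_pixel(1500, 750, (0.0, 0.0, 100.0), 0.0, 0.0, 0.0,
                         f_px=1000.0, cx=1000.0, cy=750.0))
```

Because the ground position depends directly on attitude, a 1 mrad attitude error at 100 m above ground shifts the projected point by roughly 10 cm, which is why DG relies on a high-quality inertial solution.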

Challenge: Delimiting Research Plot Boundaries

A high-accuracy spatial reference must be assigned to each plot entry in an experimental layout to enable accurate and efficient plot-level statistics of plant phenotypic attributes, such as maturity, light interception, and canopy colour. The plot boundaries must be located accurately and repeatably in the multispectral imagery collected from the UAV to avoid errors in those statistics. Manual plot boundary delineation, pattern-based methods using field plans, and image-based methods using computer vision are all in current use, but each can be time-consuming and has limitations specific to its approach. The preferred, most efficient, and most accurate method is to use Directly Georeferenced imagery and match it with the high-accuracy RTK GNSS plot positions recorded by the planting equipment.

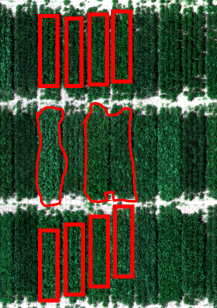

Delineating plot boundaries is one of the biggest bottlenecks encountered when using aerial imagery for high throughput phenotyping (HTP). The boundaries of individual plots need to be delimited in order to properly extract plot-level statistics from image data (see figure below).

Figure sources: Chapman et al. 2014; Haghighattalab et al. 2016

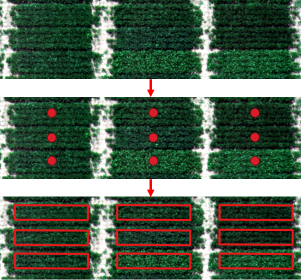
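Once plot boundaries are known in map coordinates, extracting a plot-level statistic reduces to selecting the pixels that fall inside each plot. The sketch below assumes a north-up raster (for example one orthomosaic band or a vegetation index) described by its top-left corner and square pixel size, and a rectangular plot given by its centre, length, width and planting heading. `plot_mean` is a hypothetical helper for illustration; GIS libraries offer fuller zonal-statistics tools.

```python
import numpy as np


def plot_mean(raster, x0, y0, pixel_size, center, length, width, heading_deg):
    """Mean raster value over pixels whose centres fall inside a rotated plot.

    raster       -- 2-D array from a north-up orthomosaic band or index
    x0, y0       -- map coordinates of the raster's top-left corner (metres)
    pixel_size   -- ground sample distance (metres)
    center       -- (x, y) plot centre in the same map coordinates
    length/width -- plot dimensions along / across the planting direction
    heading_deg  -- planting direction, degrees clockwise from grid north
    Returns (mean, number_of_pixels).
    """
    rows, cols = raster.shape
    xs = x0 + (np.arange(cols) + 0.5) * pixel_size  # pixel-centre eastings
    ys = y0 - (np.arange(rows) + 0.5) * pixel_size  # pixel-centre northings
    X, Y = np.meshgrid(xs, ys)                      # both shaped (rows, cols)
    h = np.radians(heading_deg)
    dx, dy = X - center[0], Y - center[1]
    along = dx * np.sin(h) + dy * np.cos(h)         # offset along the plot
    across = dx * np.cos(h) - dy * np.sin(h)        # offset across the plot
    inside = (np.abs(along) <= length / 2) & (np.abs(across) <= width / 2)
    if not inside.any():
        raise ValueError("plot does not cover any pixel centres")
    return float(raster[inside].mean()), int(inside.sum())


# Toy 10 x 10 raster with a 3-row x 4-column block of ones.
img = np.zeros((10, 10))
img[2:5, 3:7] = 1.0
print(plot_mean(img, 0.0, 10.0, 1.0, (5.0, 6.5), 4.0, 3.0, 90.0))  # (1.0, 12)
```

Testing pixel centres rather than partial pixel coverage keeps the sketch short; in practice a small inward buffer is often applied so that edge pixels mixing plot and alley are excluded.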

Fortunately, precision agriculture equipment can help streamline plot delineation. For instance, precision planting equipment with an integrated high-accuracy GPS antenna can log the antenna's position each time the planter is tripped to sow seed into a plot. GIS software can then transform these point positions into plot polygons simply by specifying the plot dimensions and orientation.¹
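The point-to-polygon step can be sketched as follows. The conventions are assumptions, since they depend on the planter and GIS workflow: the logged point marks the start of the plot on its centreline, coordinates are in a projected system in metres (e.g. UTM), the heading is the direction of travel in degrees clockwise from grid north, and the plot extends `length` metres forward. `plot_polygon` is a hypothetical name.

```python
import math


def plot_polygon(easting, northing, heading_deg, length, width):
    """Closed ring of plot corners from a planter trip point.

    Assumes (hypothetically) that (easting, northing) is the start of the plot on
    its centreline, in a projected CRS in metres, and that the plot extends
    `length` metres forward along `heading_deg` (degrees clockwise from grid north).
    """
    h = math.radians(heading_deg)
    fx, fy = math.sin(h), math.cos(h)    # unit vector in the direction of travel
    rx, ry = math.cos(h), -math.sin(h)   # unit vector to the right of travel
    half = width / 2.0
    start_left = (easting - rx * half, northing - ry * half)
    start_right = (easting + rx * half, northing + ry * half)
    end_right = (start_right[0] + fx * length, start_right[1] + fy * length)
    end_left = (start_left[0] + fx * length, start_left[1] + fy * length)
    # Repeat the first vertex to close the ring, as GeoJSON and WKT require.
    return [start_left, start_right, end_right, end_left, start_left]


# A hypothetical 5 m x 1.5 m plot planted heading due north.
for corner in plot_polygon(100.0, 200.0, 0.0, 5.0, 1.5):
    print(corner)
```

The resulting rings can be written to any polygon format and reused for every flight, provided the imagery is georeferenced accurately enough to share the same coordinate frame.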

Figure source: Bruce et al. 2019

However, the standard GPS used to record position information in image metadata typically has horizontal errors of ~5 m. This makes it difficult to compare image sets across time or to align a given image set with other geospatial layers, such as the point positions where the planter was triggered. Furthermore, image collection typically requires ~70% across-track overlap (sidelap) between adjacent flight lines, which can significantly extend the duration of imaging missions. Direct Georeferencing tools, such as those offered by Applanix, can drastically reduce both the across-track overlap requirement (e.g. 10%) and the georeferencing error (e.g. 10 cm horizontal). Such high-accuracy techniques save time and reduce workloads throughout an image processing pipeline. For instance, when all layers are accurately georeferenced, they remain consistently aligned in space and across acquisition dates.
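The effect of across-track overlap on mission length can be illustrated with simple arithmetic, reading the ~70% and 10% figures as overlaps between adjacent image strips: flight lines are spaced by the image footprint width times (1 - overlap). The 80 m footprint and 400 m field width below are hypothetical values, not Altum or Elora specifications, and `flight_lines` is an illustrative helper.

```python
import math


def flight_lines(field_width_m, footprint_width_m, sidelap):
    """Number of parallel flight lines needed to cover a field.

    sidelap -- fractional across-track overlap between adjacent strips, in [0, 1)
    """
    if not 0.0 <= sidelap < 1.0:
        raise ValueError("sidelap must be in [0, 1)")
    if field_width_m <= footprint_width_m:
        return 1
    spacing = footprint_width_m * (1.0 - sidelap)  # distance between adjacent lines
    # The first strip covers one footprint; each additional line adds `spacing`.
    # A small tolerance avoids rounding up on exact multiples due to float error.
    return 1 + math.ceil((field_width_m - footprint_width_m) / spacing - 1e-9)


print(flight_lines(400, 80, 0.70))  # 15
print(flight_lines(400, 80, 0.10))  # 6
```

With these hypothetical numbers, dropping from 70% to 10% overlap cuts the survey from 15 flight lines to 6. Real flight plans also include turns, edge buffers and in-track overlap, which this sketch ignores.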

Table: Strengths and Weaknesses (source: Bruce et al. 2019)

Solution

The Trimble APX-15 UAV Direct Georeferencing solution from Applanix is a highly advanced Differential GNSS-inertial solution developed specifically for UAV mapping applications. Together with the POSPac UAV office and cloud-based post-processing software, it enables camera pixels and LiDAR points to be geolocated at the centimetre level in a highly accurate and automated fashion. Great efficiencies are gained when it is used with a multispectral imaging system such as the MicaSense Altum, a next-generation, high-resolution thermal, multispectral, and RGB compact camera designed for UAVs. The Altum integrates a radiometric thermal camera with five high-resolution narrow-band imagers to produce fully calibrated, synchronized thermal and multispectral imagery in a single flight for advanced analytics.

Results

The Applanix and MicaSense hardware were integrated on a DJI M600 with a Ronin stabilized mount, with care taken to achieve the precise time alignment between camera exposures and GNSS-inertial data that Direct Georeferencing requires. In a flight test over soybean plots at the Elora Research Station, accuracy was 6 cm RMS horizontal (x,y) and 11 cm RMS vertical when compared with GCPs surveyed by the University. Previously surveyed, centimetre-level plot boundaries were then overlaid on the Directly Georeferenced orthomosaic from the Altum camera and aligned to within a few pixels.
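Accuracy figures of this kind are root-mean-square errors of the residuals between image-derived and surveyed check-point coordinates. The sketch below shows the arithmetic, assuming the horizontal (x,y) figure is the radial horizontal RMSE, sqrt(mean(dx² + dy²)), which equals sqrt(RMSE_x² + RMSE_y²); the case study does not say which definition was used. The residual values are made up for illustration.

```python
import math


def check_point_rmse(dx, dy, dz):
    """Horizontal (radial) and vertical RMSE from check-point residuals in metres.

    Each residual is the image-derived coordinate minus the surveyed coordinate.
    """
    n = len(dx)
    if n == 0 or not (len(dy) == len(dz) == n):
        raise ValueError("need equal-length, non-empty residual lists")
    rmse_xy = math.sqrt(sum(x * x + y * y for x, y in zip(dx, dy)) / n)
    rmse_z = math.sqrt(sum(z * z for z in dz) / n)
    return rmse_xy, rmse_z


# Made-up residuals for two check points (not the Elora data).
print(check_point_rmse([0.03, -0.04], [0.04, 0.03], [0.10, -0.10]))
```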

The results demonstrate that the MicaSense Altum multispectral UAV camera, integrated with the Trimble APX-15 EI UAV Direct Georeferencing solution, can produce highly accurate, repeatable, large-scale map products without the use of GCPs, making it well suited to applications such as plant phenotyping. The benefits of such a solution include:

- better plot-level geometry for machine-learning-based phenotyping analysis, resulting in greater accuracy and efficiency

- lower cost, by removing the laborious step of redefining the plot boundaries for every flight throughout the season

- more accurate height and volume information, leading to better estimates of the biophysical attributes of the plant canopy

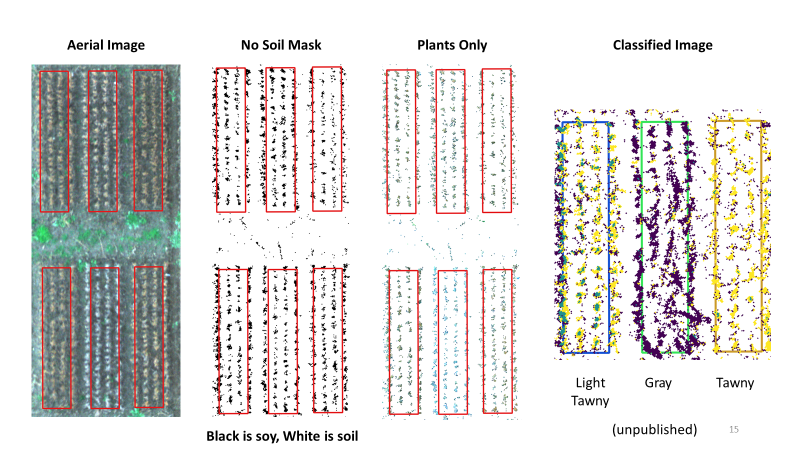

Classifying Soybean Trichome Colours

As soybeans mature, tiny hairs on the plant called trichomes exhibit different colours depending on the genetics of the variety. If this plot-level information can be tabulated automatically, for example using machine learning techniques, then hand-scoring of thousands of plots can be replaced with more objective measures based on image characteristics.

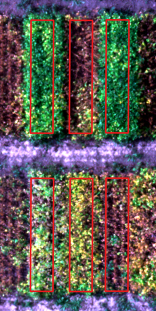

University of Guelph researchers Dr. Sulik, Dr. Bruce, and Dr. Rajcan are examining whether the MicaSense Altum, which measures light intensity in five discrete wavebands, can be used to differentiate subtle colour variations in leaf hairs (i.e. trichomes) that are visible during physiological maturity.

Initial results are promising, but further work is needed to determine whether the Altum's spectral resolution is sufficient to differentiate the subtle differences in trichome colour.

Trichome colour classes: Gray, Light Tawny, Tawny (unpublished)
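One simple way to turn plot-level Altum measurements into trichome colour classes is a nearest-centroid classifier: average the five band values of hand-scored training plots for each class, then assign each new plot to the class with the closest mean. This is an illustrative sketch only, not the method the Guelph researchers are using; the function names and the reflectance values are made up, and a real pipeline would likely need illumination normalization and a more flexible model.

```python
import numpy as np

# Altum's five multispectral bands, used here as the feature order.
BANDS = ("blue", "green", "red", "red_edge", "nir")


def fit_centroids(features, labels):
    """Return {class: mean feature vector} from hand-scored training plots."""
    X = np.asarray(features, dtype=float)   # shape (n_plots, len(BANDS))
    if X.ndim != 2 or X.shape[1] != len(BANDS):
        raise ValueError("expected one row of five band values per plot")
    y = np.asarray(labels)
    return {c: X[y == c].mean(axis=0) for c in sorted(set(labels))}


def predict(centroids, features):
    """Assign each plot to the class whose centroid is nearest (Euclidean)."""
    X = np.atleast_2d(np.asarray(features, dtype=float))
    names = list(centroids)
    C = np.stack([centroids[n] for n in names])                   # (k, bands)
    dist = np.linalg.norm(X[:, None, :] - C[None, :, :], axis=2)  # (plots, k)
    return [names[i] for i in dist.argmin(axis=1)]


# Made-up plot-mean reflectances (NOT measured data), one row per training plot.
train_X = [[0.04, 0.08, 0.06, 0.20, 0.40],
           [0.05, 0.09, 0.07, 0.21, 0.41],
           [0.05, 0.07, 0.10, 0.18, 0.30],
           [0.06, 0.08, 0.11, 0.19, 0.31]]
train_y = ["Gray", "Gray", "Tawny", "Tawny"]
centroids = fit_centroids(train_X, train_y)
print(predict(centroids, [[0.045, 0.085, 0.065, 0.20, 0.40]]))  # ['Gray']
```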

1 Bruce, RW, Rajcan, I, Sulik, J. Plot extraction from aerial imagery: A precision agriculture approach. The Plant Phenome J. 2020; 3:e20000. https://doi.org/10.1002/ppj2.20000

Special thanks to:
